Self-hosted S3 Compatible Gateway

A download can be billed for twice the actual file size if the gateway runs on another cloud provider, because the data is transferred once from the network to the gateway and once more from that provider to the client. We recommend interfacing with the network directly through the Uplink CLI API or following our Getting started guide.
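
As a rough illustration of the doubling described above, the sketch below counts chargeable bytes for one download. The function name and the assumption that each transfer is charged for the full file size are hypothetical simplifications, not a billing formula.

```python
def chargeable_bytes(file_size: int, gateway_on_other_cloud: bool) -> int:
    """Estimate chargeable bytes for a single download.

    Assumption: each transfer (network -> gateway, gateway -> client)
    is charged once for the full file size.
    """
    transfers = 2 if gateway_on_other_cloud else 1
    return file_size * transfers


one_gb = 1_000_000_000
print(chargeable_bytes(one_gb, gateway_on_other_cloud=True))
print(chargeable_bytes(one_gb, gateway_on_other_cloud=False))
```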

For a complete list of the supported architectures and API calls for the S3 Gateway, see S3 Compatibility.

Minimum Requirements

✅ 1 CPU

✅ 2GB of RAM

Depending on the load and throughput, more resources may be required.

To save on costs and improve performance, please see Multipart Part Size.

Dependencies

✅ An access grant (see Create Access Grant in CLI or Create an Access Grant)

Steps:

Get and install Gateway ST

Download, unzip, and install the binary for your OS:

Configure Gateway ST

You can configure Gateway ST in two ways:

Interactive Setup (only if it is your first setup)

Using an Access Grant

Interactive Setup

1. Set up your S3 gateway by running the following command and following the instructions provided by the wizard:

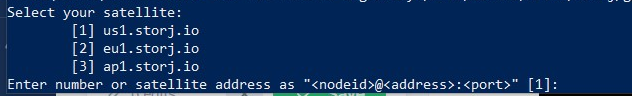

2. Enter the numeric choice or satellite address corresponding to the satellite you've created your account on.

The satellite address should be entered as <nodeid>@<address>:<port>, for example 12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs@eu1.storj.io:7777. Alternatively, enter the number from the list:

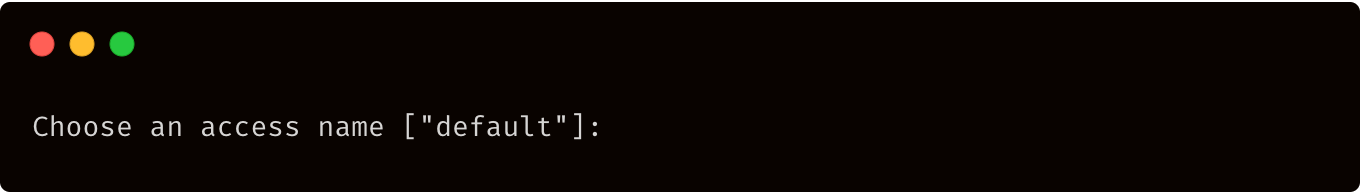
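
To make the <nodeid>@<address>:<port> format concrete, here is a minimal sketch that splits a satellite address into its three parts. The helper and type names are hypothetical and are not part of the gateway; the wizard performs its own validation.

```python
from typing import NamedTuple


class SatelliteAddress(NamedTuple):
    node_id: str
    host: str
    port: int


def parse_satellite_address(value: str) -> SatelliteAddress:
    """Split '<nodeid>@<address>:<port>' into node ID, host, and port."""
    node_id, sep, host_port = value.partition("@")
    if not sep or not node_id:
        raise ValueError("expected <nodeid>@<address>:<port>")
    host, sep, port = host_port.rpartition(":")
    if not sep or not host or not port.isdigit():
        raise ValueError("expected <nodeid>@<address>:<port>")
    return SatelliteAddress(node_id, host, int(port))


example = "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs@eu1.storj.io:7777"
print(parse_satellite_address(example))
```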

3. Choose an access name (this step may not yet be implemented in the version of S3 Gateway you are using - if you don't see this prompt, skip to step 5 below).

If you would like to choose your own access name, please be sure to only use lowercase letters. Including any uppercase letters will result in your access name not getting recognized when creating buckets.

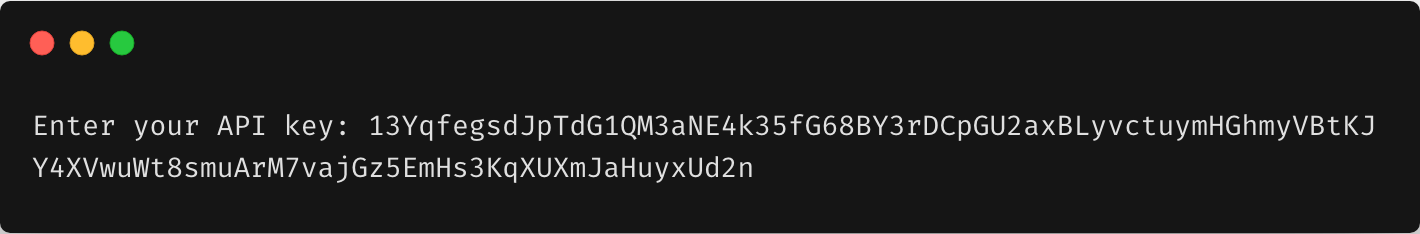
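
If you generate access names in a script, a quick check like the following sketch can catch uppercase characters before you run the wizard. The function name is hypothetical, and the check covers only the uppercase rule stated above, not any other constraint the gateway may apply.

```python
def is_lowercase_access_name(name: str) -> bool:
    """Return True if the name is non-empty and has no uppercase characters."""
    return bool(name) and not any(ch.isupper() for ch in name)


for candidate in ("site", "Site", "my-site"):
    print(candidate, is_lowercase_access_name(candidate))
```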

4. Enter the access grant you generated (see Create Access Grant in CLI):

5. Create and confirm an encryption passphrase, which is used to encrypt your files before they are uploaded:

Please note that Storj Labs does not know or store your encryption passphrase, so if you lose it, you will not be able to recover your files.

6. Your S3 gateway is configured and ready to use!

Using an Access Grant

You can obtain an Access Grant in two ways: Create Access Grant in CLI or Create an Access Grant.

Once you have an access grant, configure the gateway as follows:

This command will register the provided access as the default access in the gateway config file.

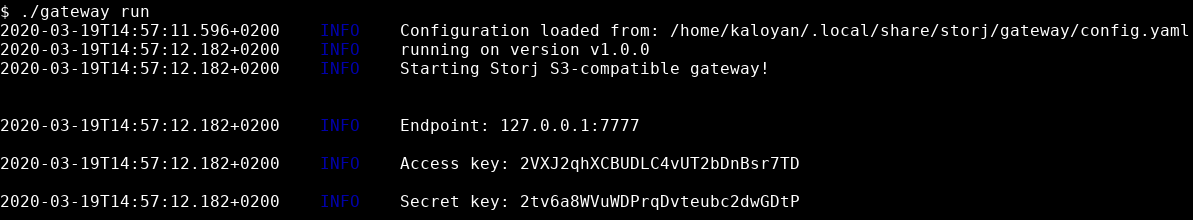

Run Gateway ST

The gateway functions as a daemon. Start it and leave it running.

The gateway should output your S3-compatible endpoint, access key, and secret key.

Configure AWS CLI to use Gateway ST

Please make sure you have the AWS CLI installed.

Once you do, in a new terminal session, configure it with your Gateway's credentials:

Then, test out some AWS S3 CLI commands!

See also AWS CLI Endpoint

Try it out!

Create a bucket

Upload an object

List objects in a bucket

Download an object

Generate a URL for an object

(This URL will allow live video streaming through your browser or VLC)

Delete an object
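
The steps above map onto standard `aws s3` subcommands. The sketch below only builds and prints the command lines; it does not run them. It assumes the gateway is reachable at http://localhost:7777 (the address used later on this page), and the bucket and file names are hypothetical placeholders.

```python
import shlex

ENDPOINT = "http://localhost:7777"  # assumption: local gateway endpoint
BUCKET = "my-bucket"                # hypothetical bucket name
KEY = "video.mp4"                   # hypothetical object / local file name


def aws_s3(*args: str, endpoint: str = ENDPOINT) -> list[str]:
    """Build an argv list for `aws s3 ...` pointed at the gateway endpoint."""
    return ["aws", "--endpoint-url", endpoint, "s3", *args]


commands = {
    "create a bucket": aws_s3("mb", f"s3://{BUCKET}"),
    "upload an object": aws_s3("cp", KEY, f"s3://{BUCKET}/{KEY}"),
    "list objects in a bucket": aws_s3("ls", f"s3://{BUCKET}"),
    "download an object": aws_s3("cp", f"s3://{BUCKET}/{KEY}", f"downloaded-{KEY}"),
    "generate a URL for an object": aws_s3("presign", f"s3://{BUCKET}/{KEY}"),
    "delete an object": aws_s3("rm", f"s3://{BUCKET}/{KEY}"),
}

for step, argv in commands.items():
    print(f"{step}: {shlex.join(argv)}")
```

To execute one of these from Python instead of pasting it into a terminal, pass the list to `subprocess.run(argv, check=True)` while the gateway is running.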

All Commands

cp - Copies a local file or S3 object to another location locally or in S3.

ls - Lists S3 objects and common prefixes under a prefix or all S3 buckets.

mb - Creates an S3 bucket.

mv - Moves a local file or S3 object to another location locally or in S3.

presign - Generates a pre-signed URL for an S3 object. This allows anyone who receives the pre-signed URL to retrieve the S3 object with an HTTP GET request.

rb - Deletes an empty S3 bucket.

rm - Deletes an S3 object.

sync - Syncs directories and S3 prefixes. Recursively copies new and updated files from the source directory to the destination. Only creates folders in the destination if they contain one or more files.

And that's it! You've learned how to use our S3-compatible Gateway. Ideally, you'll see how easy it is to swap out AWS for the Uplink, going forward.

Advanced usage

Advanced usage for the single-tenant Gateway

Adding Access Grants

You can add several access grants to the config.yaml, using this format:

You can see the path to the default config file config.yaml with this command:

Running options

You can run the gateway with a specific access grant, or with the name of a registered one (for example --access site), by using the --access option:

Running Gateway ST to host a static website

You can also run a gateway to handle a bucket as a static website.

Now you can navigate to http://localhost:7777/site/ to see the bucket site as XML or to http://localhost:7777/site/index.html to see a static page, uploaded to the bucket site.

You can publish this page to the internet, but in this case, you should run your gateway with the option --server.address local_IP:local_Port (replace local_IP with the local IP of your PC and local_Port with the port you want to expose).

If you use localhost or 127.0.0.1 as your local_IP, you will not be able to publish it directly (via port forwarding, for example); instead, you will have to use a reverse proxy.
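
As a small aid for the rule above, this sketch checks whether a local_IP:local_Port value points at a loopback address, in which case a reverse proxy would be needed. The helper name is hypothetical, and it treats only the literal name localhost and loopback IP addresses as loopback.

```python
import ipaddress


def is_loopback_bind(server_address: str) -> bool:
    """Return True if the host part of 'host:port' is localhost or a loopback IP."""
    host, _, _port = server_address.rpartition(":")
    host = host.strip("[]")  # allow bracketed IPv6 such as [::1]:7777
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Not an IP literal (for example a hostname); treated as non-loopback here.
        return False


for address in ("127.0.0.1:7777", "localhost:7777", "192.168.1.10:7777"):
    needs_proxy = is_loopback_bind(address)
    print(address, "-> reverse proxy needed" if needs_proxy else "-> can be published directly")
```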

Running Gateway ST to host a static website with cache

You can use MinIO caching in conjunction with static website hosting.

The following example uses /mnt/drive1, /mnt/drive2, /mnt/cache1 ... /mnt/cache3 for caching, while excluding all objects under bucket mybucket and all objects with '.pdf' extensions on an S3 Gateway setup. Objects are cached if they have been accessed three times or more. Cache max usage is restricted to 80% of disk capacity in this example. Garbage collection is triggered when the high watermark is reached (i.e. at 72% of cache disk usage) and will clear the least recently accessed entries until the disk usage drops to the low watermark, i.e. cache disk usage drops to 56% (70% of 80% quota).
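
The watermark percentages are relative to the quota, not to the whole disk. The sketch below reproduces the arithmetic; the 90% high watermark is inferred from the 72% figure (72 = 90% of 80) rather than stated directly in the text, and the function name is hypothetical.

```python
def cache_thresholds(quota_pct: float, low_pct: float, high_pct: float) -> tuple[float, float]:
    """Convert quota-relative watermarks into percentages of total disk capacity."""
    low = quota_pct * low_pct / 100
    high = quota_pct * high_pct / 100
    return low, high


# Quota of 80%, low watermark 70%, high watermark 90% (inferred).
low, high = cache_thresholds(quota_pct=80, low_pct=70, high_pct=90)
print(f"garbage collection starts at {high}% disk usage and stops at {low}%")
```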

Export the environment variables before running the Gateway:
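
The original export commands are not reproduced here. As a hedged sketch, the settings described above could be collected as follows; the variable names are assumed from MinIO's legacy disk cache documentation and should be verified against your Gateway version, and the drive pattern is an assumed rendering of the drive list in the example.

```python
import os

# Assumption: names follow MinIO's legacy disk cache settings; values mirror the example above.
cache_env = {
    "MINIO_CACHE": "on",
    "MINIO_CACHE_DRIVES": "/mnt/drive1,/mnt/drive2,/mnt/cache{1...3}",
    "MINIO_CACHE_EXCLUDE": "mybucket/*,*.pdf",  # skip bucket mybucket and all .pdf objects
    "MINIO_CACHE_AFTER": "3",                   # cache after three accesses
    "MINIO_CACHE_QUOTA": "80",                  # use at most 80% of disk capacity
    "MINIO_CACHE_WATERMARK_LOW": "70",          # 70% of quota = 56% of disk
    "MINIO_CACHE_WATERMARK_HIGH": "90",         # 90% of quota = 72% of disk (inferred)
}

# Merge with the current environment so the result can be handed to a child process.
env = {**os.environ, **cache_env}

for name, value in cache_env.items():
    print(f'export {name}="{value}"')
```

The printed lines can be pasted into a shell before starting the Gateway, or `env` can be passed to `subprocess.run(..., env=env)` when launching it from Python.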

Cache disks are not supported where the atime function is disabled, because caching requires atime to be enabled.

Setting the MINIO_BROWSER=off environment variable disables the MinIO browser. This makes sense when running the gateway as a static website in production.